shiv wrote: Vivek, it's not about your analysis but how the analysis is interpreted, and this is something that PR and advertising depts and sales depts anywhere understand.

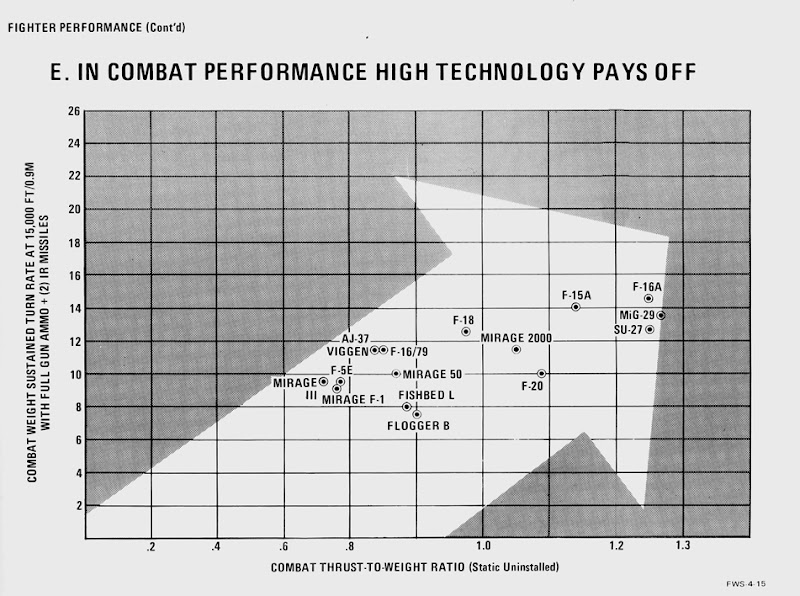

Unless one produces a graph in which every parameter of the LCA exceeds every parameter of every other aircraft, 99% of people are going to say that it is a useless aircraft, fuelling the media and helping non-techies in the IAF to do their hackthoo. It is OK for eggheads and academics to look at paper comparisons and do the fancy math, but the "in your face" conclusion that has been reached on this very page is that the LCA is not as good as the Mirage 2000 even though it is 20 years younger, because 99% of BRFites cannot actually understand how you got the results, but they can understand the results. That is the "bad news".

Right. Therein lies the problem that I wonder about as well. With any quantitative analysis, the creator of the plots knows where the numbers are coming from, but how do you convince the users of their veracity?

It's a very fair argument, and one to which I have no easy answer, short of writing a peer-reviewed paper on the same. Even then, the peers would have to review the code and the output to be 100% sure of the analysis.

I don't know, but thinking out loud, does it make sense for other prominent BRF users to become proficient at using FlightStream so that one analysis can be vetted by others?

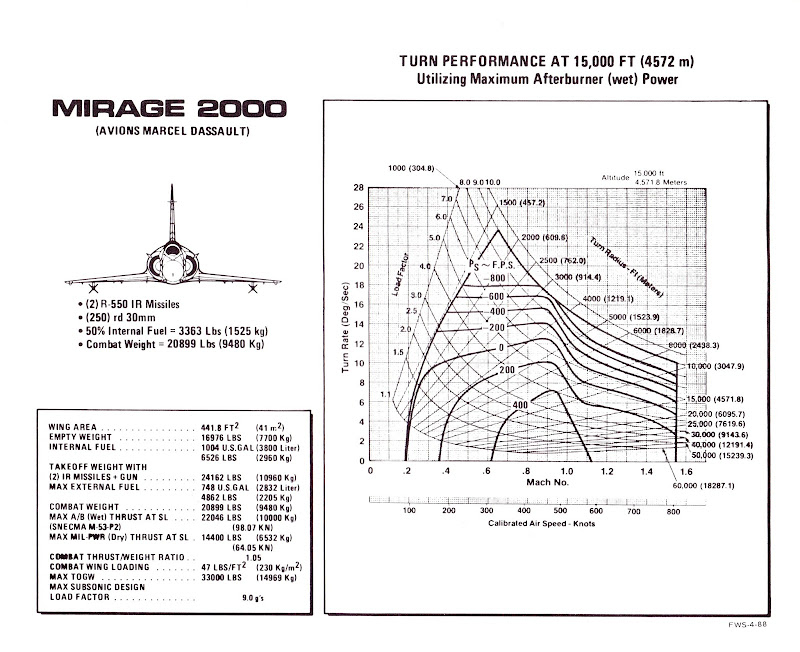

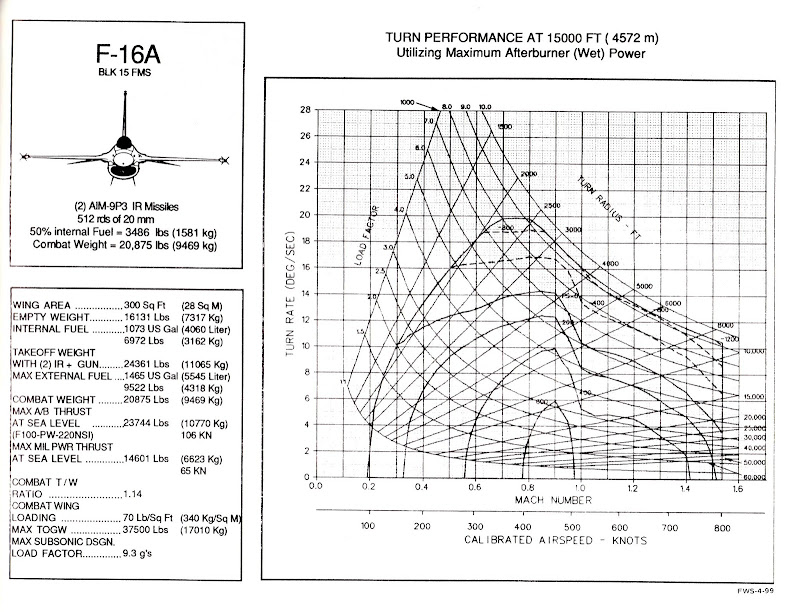

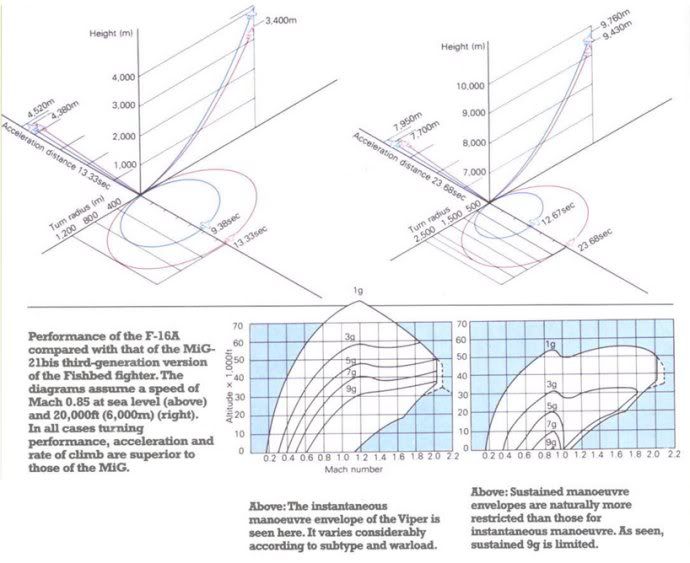

shiv wrote: In operational service aircraft are typically used where their performance can score over anything that goes over them in other situations. You know that some huge, heavy bombers at high altitude could outmanoeuvre the needle nosed supersonic fighters that were sent out to catch them. The graph tells the truth, but the shape of the graph can change if you change the context and parameters chosen - and those are the things that are the big variables in real life.

Agreed. Which is why I always emphasize that folks need to be careful about the context in which I try to do an apples-to-apples comparison. Generalizing the analysis without context is dangerous.

Not to mention, if the data used (like the ADA paper for the LCA data) is outdated and/or just not correct, then we are going down the wrong path and wouldn't know it.

Like I said: difficult questions for which there are no easy answers.

But the question is: under these circumstances, is it better to have such analysis or not have it at all? If the answer is that we do not want public perception shifting one way or another without real access to experimental data, then perhaps the correct recourse is for me to not put up such analysis?

Look, I am not adamant on this point. If the plots I put here are seen to be damaging, I will gladly snuggle back into the scenarios dhaga from which I have ventured out. Cerberus awaits, after all!

-Vivek